# ToR switch vs core switch series #

The CloudEngine 6800 series features an advanced hardware design combined with 10 GE, 25 GE, or 50 GE access ports and 40 GE or 100 GE uplink ports. Offering high performance, high port density, and low latency, CloudEngine 6800 series switches enable enterprises and carriers alike to build cloud-oriented data center networks.

ToR switches are often required to be multiport and low-latency because they have to handle traffic at different layers. At present, 1G and 10G data rates still account for the largest share of switch-to-server connections, and there are still few 40G and 100G ToR switches that support multiple data rates. FS ToR switches come in port configurations such as:

- 24x 10/100/1000BASE-T RJ45, 4x 1G RJ45/SFP combo
- 20x 1G/10G SFP+, 4x 10G/25G SFP28, 2x 40G QSFP+
- 24x 10/100/1000BASE-T RJ45, 16x 10G/25G SFP28
- 48x 25G SFP28, 2x 10G SFP+, 8x 100G QSFP28

All these FS ToR switches support L2/L3 features, IPv4/IPv6 dual stack, data center bridging, and FCoE.

Of course, it’s ‘best practice’ to put switches at the top of the rack, so I’m doubtful that anything will change. Yet middle of rack would save most people money with few downsides. Take a walk around any co-lo facility and you will see that most racks have plenty of space.

Over the last few years, the price of generic cables has dropped substantially:

- A third-party 1M 25G SFP28 passive DAC twinax is ~$40.
- A third-party 5M 25G SFP28 passive DAC twinax is ~$70.

If you choose to buy branded modules, the cost difference becomes quite large: roughly, a Cisco 1M version is $400 and the 5M version is $800. A lot of people won’t buy third party, but in my experience the ratio of 200% markup holds true. Rule of thumb: you will need at least two cables per server and 15 servers per rack, so 30 x $400 = $12,000 per rack. Most people don’t do the numbers like this, so it’s rarely considered. Another source of problems is “your budget, my budget”, where it’s OK to spend someone else’s budget, just not your own.
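The per-rack arithmetic above is easy to rerun with your own numbers. The following is a minimal Python sketch, assuming the rule-of-thumb figures quoted in this post (two cables per server, 15 servers per rack, and the approximate 1M/5M prices for third-party and Cisco 25G SFP28 DACs). The `PRICES` table and the `rack_cable_cost` helper are hypothetical names for illustration, and the prices are rough figures rather than quotes.

```python
# Rule-of-thumb inputs quoted in the post (hypothetical defaults; adjust to taste).
CABLES_PER_SERVER = 2
SERVERS_PER_RACK = 15

# Approximate price in USD per 25G SFP28 passive DAC, keyed by (vendor, length in metres).
PRICES = {
    ("third-party", 1): 40,
    ("third-party", 5): 70,
    ("cisco", 1): 400,
    ("cisco", 5): 800,
}


def rack_cable_cost(vendor: str, length_m: int,
                    servers: int = SERVERS_PER_RACK,
                    cables_per_server: int = CABLES_PER_SERVER) -> int:
    """Total DAC spend for one rack: number of cables times unit price."""
    cables = servers * cables_per_server
    return cables * PRICES[(vendor, length_m)]


if __name__ == "__main__":
    # Print the per-rack total for every vendor/length combination.
    for (vendor, length), price in sorted(PRICES.items()):
        total = rack_cable_cost(vendor, length)
        print(f"{vendor:>11} {length}m @ ${price}: ${total:,} per rack")
```

With these inputs, 1M cables come to $12,000 per rack when branded versus $1,200 third party, and 5M cables to $24,000 versus $2,100, which is why both cable length and sourcing matter.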
The middle-of-rack solution is common when building “rack at a time” data centres (or, more likely, ten or more racks at a time). Such customers have their racks assembled and tested off site before shipping them to the DC. It’s unlikely that these racks will ever be changed or upgraded before decommissioning, as they are operated on a ‘cloud SRE’ model.

A quick diagram shows how much shorter the cables will be. Shorter runs enable the use of cheaper and more reliable passive coaxial Ethernet for 25G/50G; active coax is for cable runs over 3 metres (but no more than 10 metres).

There are tradeoffs. Where you are building your data centre a server at a time, you might not know how many servers will end up in the rack. When you are using chassis-based servers that take 4RU or 8RU, you can run into space allocation and weight problems, and putting heavy mass at the top of a rack can be a safety issue that may require floor spreaders. Another dependency is your uplink cabling: if, for some reason, you are using coax to connect to the core, then ToR might be the only way to reach the spine switches.

OK, yes, people are worried about space, but it is an imaginary problem of incompetent project managers or data centre managers. In reality, very few companies fill their racks before reaching power/cooling limits, so space allocation is not a widespread problem.

What really happens when you add a ToR switch is that you get higher data rates closer to the servers, so there is an appearance that latency was decreased. I wonder if you ended up doing that anywhere?

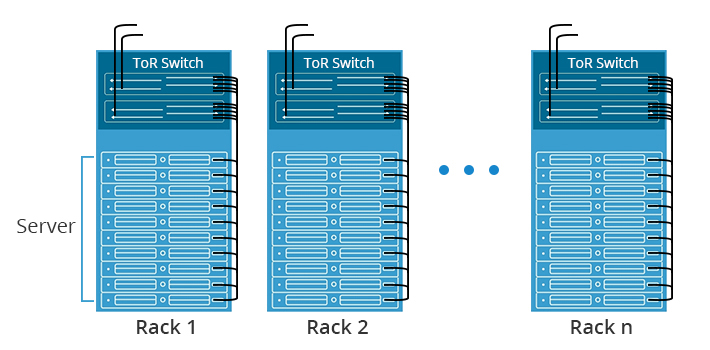
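To put rough numbers on the shorter-cable argument, here is a minimal Python sketch that compares the worst-case vertical run from a top-of-rack switch and a middle-of-rack switch, then classifies a run using the 3 metre passive and 10 metre active coax limits quoted above. It assumes a hypothetical 42U rack, a standard 44.45 mm rack unit, and vertical distance only (no horizontal routing, and no slack unless you pass `slack_m`). The function names and the "fibre" fallback beyond 10 metres are assumptions for illustration.

```python
# Sketch: worst-case in-rack cable run for ToR vs. middle-of-rack placement.

RACK_UNIT_M = 0.04445     # 1 rack unit = 1.75 in = 44.45 mm
PASSIVE_DAC_MAX_M = 3.0   # passive coax limit quoted in the post
ACTIVE_DAC_MAX_M = 10.0   # active coax limit quoted in the post


def cable_type(length_m: float) -> str:
    """Classify a run using the 3 m / 10 m coax limits."""
    if length_m <= PASSIVE_DAC_MAX_M:
        return "passive DAC"
    if length_m <= ACTIVE_DAC_MAX_M:
        return "active DAC"
    return "fibre"  # assumption: runs over 10 m need optics instead of coax


def longest_run_m(switch_u: int, rack_units: int = 42, slack_m: float = 0.0) -> float:
    """Worst-case vertical distance from the switch to the farthest U position.

    slack_m is a hypothetical allowance for routing through cable management.
    """
    farthest = max(switch_u - 1, rack_units - switch_u)  # in rack units
    return farthest * RACK_UNIT_M + slack_m


if __name__ == "__main__":
    for label, switch_u in (("top of rack", 42), ("middle of rack", 21)):
        run = longest_run_m(switch_u)
        print(f"{label}: longest run {run:.2f} m -> {cable_type(run)}")
```

In a 42U rack, moving the switch from U42 to U21 roughly halves the worst-case vertical run (about 1.82 m versus 0.93 m); real runs will be longer once cable management is included.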

It’s common practice to place network switches at the top of the rack. At higher Ethernet speeds, where cabling costs are outsized, it makes sense to place the switch in the middle of the rack, but there are tradeoffs. I received the following question from Gavan: “Something I recall from one of your podcasts a long time ago was the option of moving to ‘middle of the rack’ switches and using cheaper AUI connectors instead (I think it was one of your earlier podcasts; I started listening after a blog post).”
